Cutting-edge performance for bleeding-edge workflows

Qumulo’s high-performance file storage delivers the elasticity and power to support your most demanding cloud-based workflows, anywhere and everywhere.

Disruptive technology at a disruptive price

In less than 15 minutes, Qumulo’s platform-agnostic, disaggregated architecture can give you the scale and performance flexibility that your most demanding workflows need, at a lower price point than any other cloud file service.

Flexible storage

- Deploy Qumulo on-premises using your preferred hardware.

- Use Cloud Native Qumulo on AWS or Azure for your biggest workloads.

- Exabyte-plus scalability in any local or cloud-based storage instance.

Elastic performance

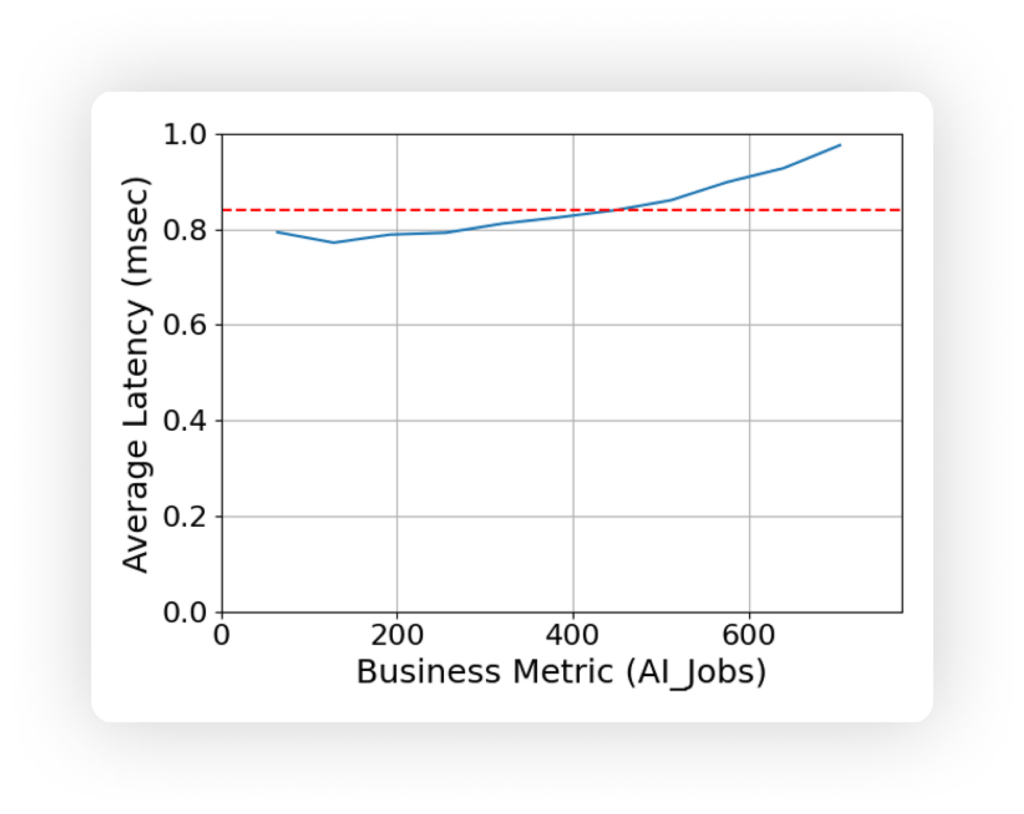

- Burstable performance for peak processing demands.

- Elastically scale throughput to 100+ GBps on any cloud instance.

- Elastically scale to 1M+ IOPS per instance in the cloud.

Unified data

- Qumulo Cloud Data Fabric presents a unified data plane across all Qumulo instances in all locations.

- Unique caching architecture enables low-latency access to remote data.

- NeuralCache prefetches data to the appropriate location before it’s needed.

The fastest file service in any cloud at a fraction of the cost

Full integration with cloud AI

Azure Native Qumulo and Microsoft Copilot

Did you know that Microsoft Copilot can connect directly to Azure Native Qumulo to read and analyze your Office files, PDF files, text files, and more? With Qumulo’s custom connectors, you can use Copilot to gain new intelligence and insight into virtually any type of unstructured data in your Azure Native Qumulo environment.

“Our UC San Diego customers require… up to 200 Gbps of provisioned performance. The Data Science Machine Learning Platform runs on Qumulo, where thousands of students execute performance-sensitive AI workloads concurrently. These students require optimal configurations to ensure efficiency when running GPUs over thousands of NFS connections.”

Brian Balderston

Director of Infrastructure, San Diego Supercomputer Center

High-performance data access for your most demanding workflows

Whether in your data center, on AWS, or on Azure, Qumulo delivers the exabyte-scale capacity and dynamically scalable performance to power your high-performance compute applications.